Bagging predictors. Machine Learning

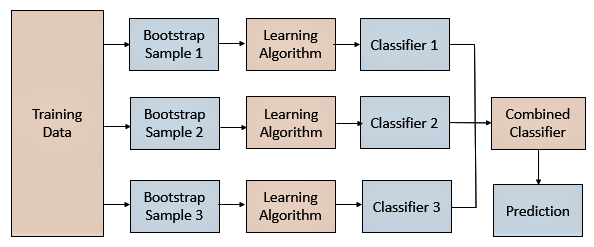

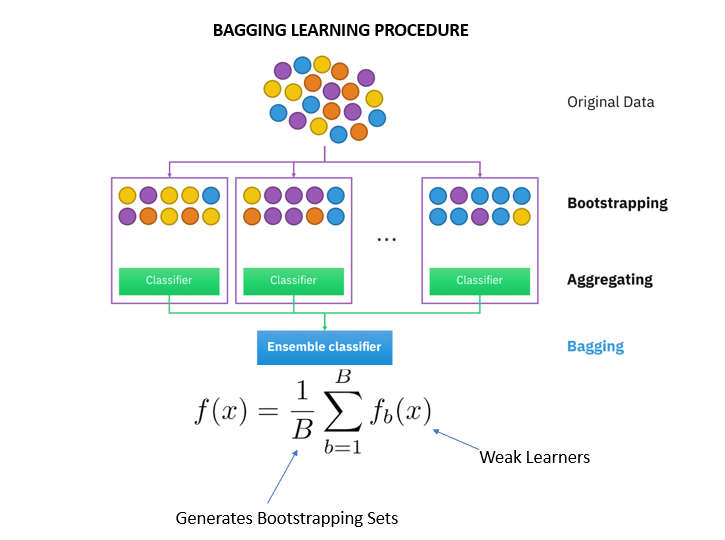

Bootstrap aggregating, also called bagging (from **b**ootstrap **agg**regat**ing**), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. Bagging reduces variance and helps to avoid overfitting.

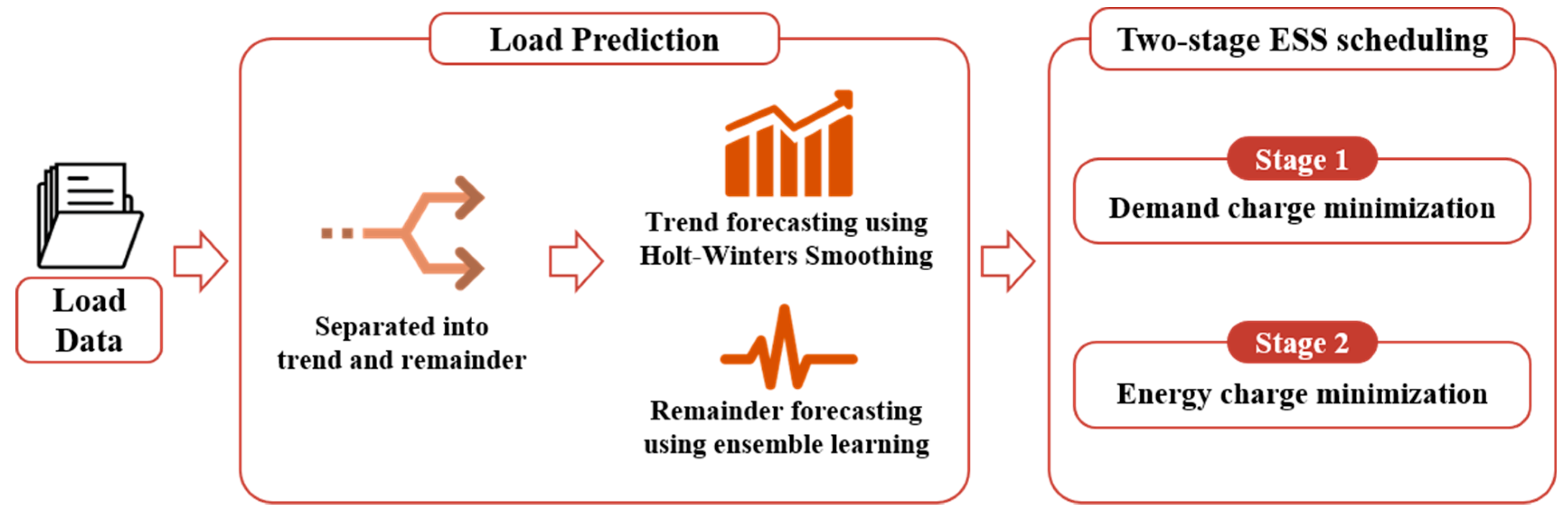


In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling.

Hence many weak models are combined to form a better model. If perturbing the learning set can cause significant changes in the predictor constructed, then bagging can improve accuracy.

Random Forest is a type of ensemble machine learning algorithm based on Bootstrap Aggregation, or bagging. The bagging algorithm builds N trees in parallel on N randomly generated datasets. Boosting, by contrast, tries to reduce bias.
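
The idea of building N models on N randomly generated datasets can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the one-split regression tree (`fit_stump`), the helper names `fit_bagged` and `predict_bagged`, and the toy step-function data are all assumptions made for the example. The loop below runs sequentially, but each model depends only on its own dataset, which is why the models could be built in parallel.

```python
import random
from statistics import mean

def fit_stump(points):
    """Fit a one-split regression tree (a stump) to a list of (x, y) pairs."""
    xs = sorted({x for x, _ in points})
    overall = mean(y for _, y in points)
    best_sse, best_t, best_left, best_right = float("inf"), None, overall, overall
    # Try a threshold halfway between each pair of neighbouring x values.
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in points if x <= t]
        right = [y for x, y in points if x > t]
        lm, rm = mean(left), mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best_sse:
            best_sse, best_t, best_left, best_right = sse, t, lm, rm
    if best_t is None:  # all x values identical: predict the overall mean
        return lambda x: overall
    return lambda x: best_left if x <= best_t else best_right

def fit_bagged(points, n_models, rng):
    """Train n_models stumps, each on its own randomly generated dataset."""
    models = []
    for _ in range(n_models):
        # Random dataset of the same size, drawn with replacement.
        sample = [rng.choice(points) for _ in points]
        models.append(fit_stump(sample))
    return models

def predict_bagged(models, x):
    """Average the numerical predictions of all models."""
    return mean(m(x) for m in models)

rng = random.Random(42)  # fixed seed so the run is repeatable
# Toy data (assumed for illustration): a noisy step from 0 to 1 at x = 0.5.
data = [(i / 50, (1.0 if i >= 25 else 0.0) + rng.gauss(0, 0.2)) for i in range(50)]

models = fit_bagged(data, n_models=25, rng=rng)
low, high = predict_bagged(models, 0.1), predict_bagged(models, 0.9)
print(f"prediction at 0.1: {low:.2f}, prediction at 0.9: {high:.2f}")
```

A single stump is sensitive to which rows it sees, so it is a reasonable stand-in for the kind of base model that benefits from being averaged.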

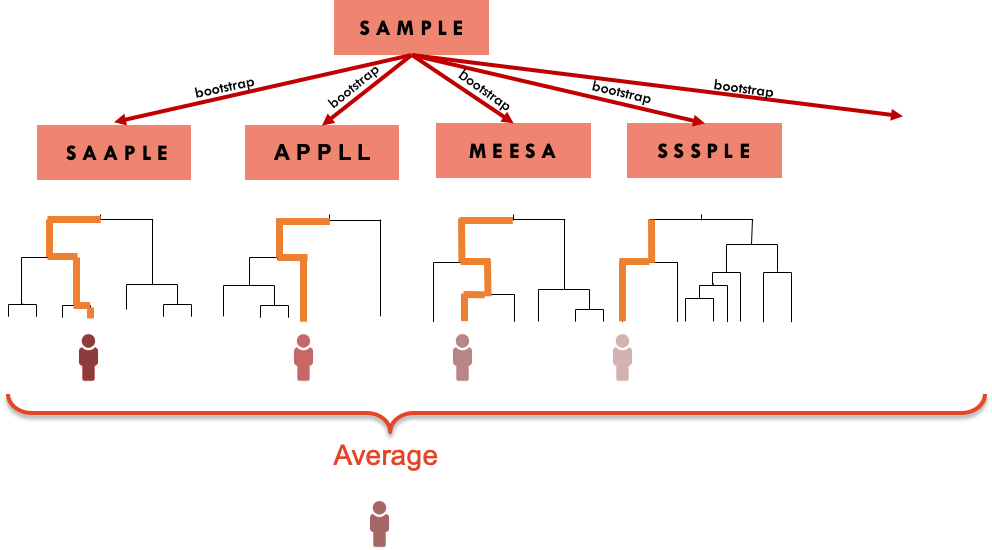

Tests on real and simulated data sets using classification and regression trees and subset selection in linear regression show that bagging can give substantial gains in accuracy. This chapter illustrates how we can use bootstrapping to create an ensemble of predictions.

The combination of multiple predictors decreases variance, increasing stability.
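
A small simulation can make the variance claim concrete. The sketch below assumes an idealized setting that is not from the source: each predictor returns the true value plus independent Gaussian noise, and the constants `TRUE_VALUE` and `NOISE_SD` are made up for the example. Under that assumption, averaging 25 predictors should cut the variance to roughly 1/25 of a single predictor's variance.

```python
import random
from statistics import mean, pvariance

rng = random.Random(1)  # fixed seed so the run is repeatable
TRUE_VALUE = 10.0       # assumed target value
NOISE_SD = 2.0          # assumed noise level of one predictor

def noisy_prediction():
    """One predictor: the true value plus independent Gaussian noise."""
    return TRUE_VALUE + rng.gauss(0, NOISE_SD)

def averaged_prediction(n_predictors):
    """Combine n_predictors independent predictions by averaging."""
    return mean(noisy_prediction() for _ in range(n_predictors))

trials = 2000
single = [noisy_prediction() for _ in range(trials)]
combined = [averaged_prediction(25) for _ in range(trials)]

var_single = pvariance(single)
var_combined = pvariance(combined)
print(f"variance of one predictor:      {var_single:.3f}")
print(f"variance of 25 averaged models: {var_combined:.3f}")
```

In real bagging the models are trained on overlapping samples of the same data, so their errors are correlated and the reduction is smaller than in this independent-noise idealization.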

Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. Ensemble methods improve model precision by using a group of models which, when combined, outperform the individual models used separately. The vital element is the instability of the prediction method.

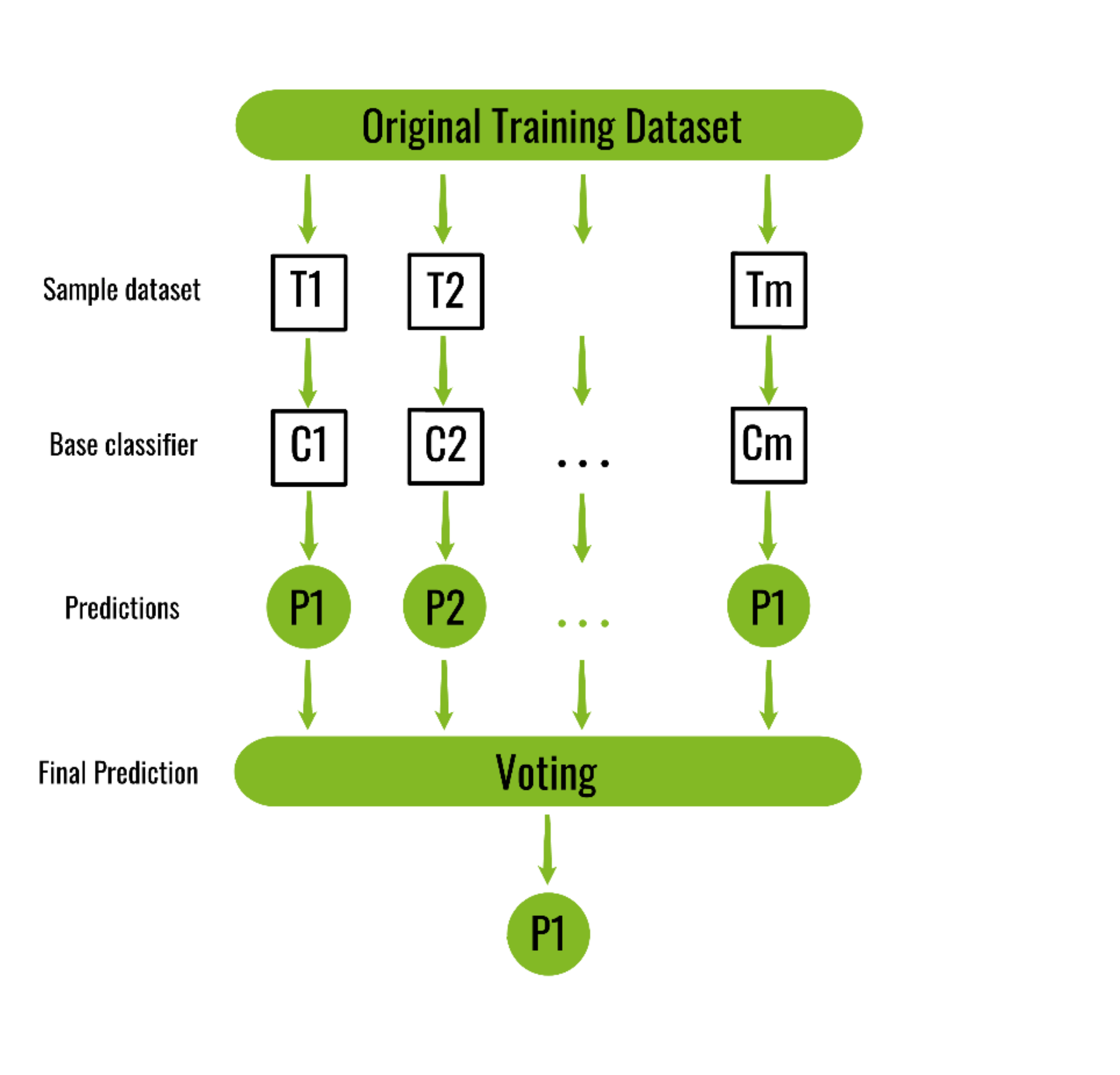

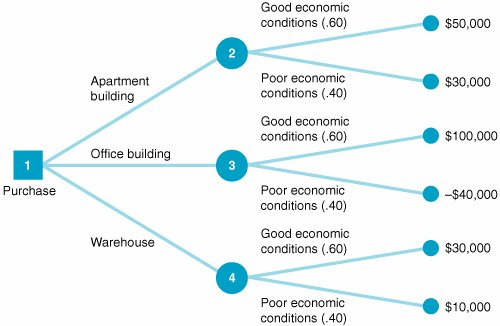

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. Random Forest is one of the most popular and most powerful machine learning algorithms. Bagging is a powerful ensemble method that helps to reduce variance and, by extension, prevent overfitting.
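
The two aggregation rules are short enough to write out. The function names `aggregate_regression` and `aggregate_classification` and the sample predictions are hypothetical, chosen only to illustrate averaging versus plurality voting.

```python
from collections import Counter
from statistics import mean

def aggregate_regression(predictions):
    """Numerical outcome: average over the versions."""
    return mean(predictions)

def aggregate_classification(predictions):
    """Class outcome: plurality vote (the most frequent label wins).

    On a tie, Counter.most_common keeps the label that was seen first.
    """
    return Counter(predictions).most_common(1)[0][0]

# Predictions from several versions of a predictor for one input (made-up values).
numeric_versions = [2.0, 2.5, 3.0, 2.5]
class_versions = ["cat", "dog", "cat", "bird", "cat"]

avg = aggregate_regression(numeric_versions)
winner = aggregate_classification(class_versions)
print(avg, winner)  # prints: 2.5 cat
```

A plurality vote only needs the most common label, not a majority, which matters when there are more than two classes.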

Machine Learning 24, 123-140 (1996). Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms. If the classifier is stable and simple (high bias), then apply boosting.

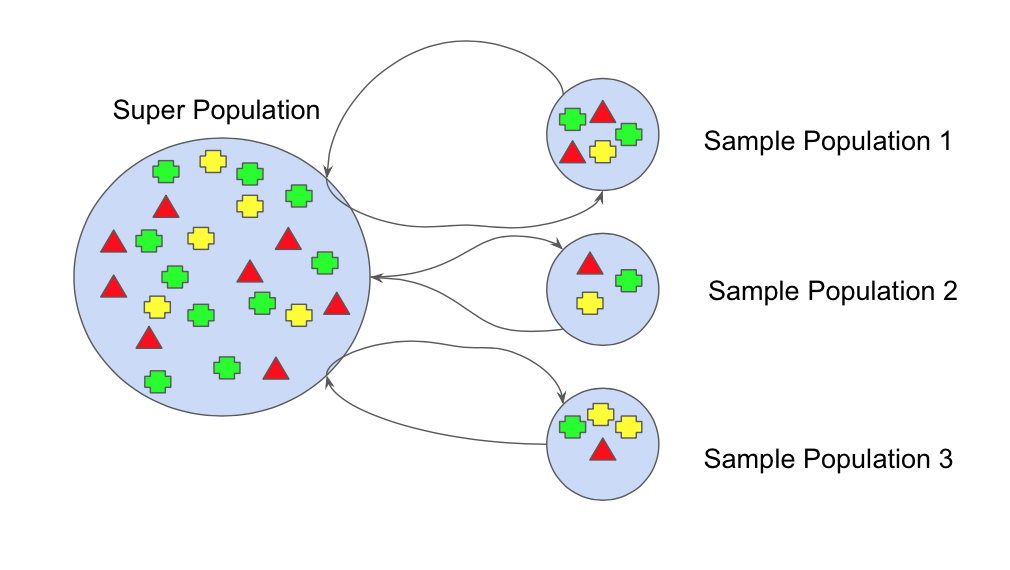

In Section 2.4.2 we learned about bootstrapping as a resampling procedure, which creates b new bootstrap samples by drawing samples with replacement from the original training data. The multiple versions are formed by making bootstrap replicates of the learning set. Bagging is a parallel ensemble method where every model is constructed independently.
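
Drawing bootstrap samples takes only a few lines. In this sketch the helper `bootstrap_sample`, the choice of 5 replicates, and the 1000-row stand-in dataset are assumptions for illustration.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]

rng = random.Random(0)             # fixed seed so the run is repeatable
training_data = list(range(1000))  # stand-in for 1000 training rows

# b = 5 bootstrap replicates of the training data
replicates = [bootstrap_sample(training_data, rng) for _ in range(5)]

for i, rep in enumerate(replicates):
    unique_fraction = len(set(rep)) / len(training_data)
    print(f"replicate {i}: size={len(rep)}, distinct rows={unique_fraction:.3f}")
```

Because rows are drawn with replacement, some appear several times and others not at all. For large datasets a replicate contains about 63% of the distinct original rows (1 - 1/e), which is what makes the replicates differ from one another.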

The diversity of the members of an ensemble is known to be an important factor in determining its generalization error. Aggregation in bagging refers to combining the predictions of all the models into a single output, by averaging for a numerical outcome or by voting for a class. Bagging and boosting are two ways of combining classifiers.

The Random forest model uses Bagging. Ensemble methods like bagging and boosting that combine the decisions of multiple hypotheses are some of the strongest existing machine learning methods. The results show that the research method of clustering before prediction can improve prediction accuracy.

If the classifier is unstable (high variance), then apply bagging. We see that both the bagged and subagged predictors outperform a single tree in terms of mean squared prediction error (MSPE).

For a subsampling fraction of approximately 0.5, subagging achieves nearly the same prediction performance as bagging while coming at a lower computational cost. Customer churn prediction was carried out using AdaBoost classification and BP neural network techniques. Bagging is usually applied where the classifier is unstable and has a high variance.
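
The sampling step of subagging (subsample aggregating) differs from bagging in one place. The sketch below assumes the usual definition, in which each model is trained on a subset drawn without replacement; the helper name `subsample` and the 200-row stand-in dataset are hypothetical.

```python
import random

def subsample(data, fraction, rng):
    """Draw a fraction of the rows without replacement (no duplicates)."""
    k = max(1, int(round(fraction * len(data))))
    return rng.sample(data, k)

rng = random.Random(7)            # fixed seed so the run is repeatable
training_data = list(range(200))  # stand-in for 200 training rows

# Ten subsets, each holding half of the training rows.
subsets = [subsample(training_data, 0.5, rng) for _ in range(10)]
print(len(subsets[0]), len(set(subsets[0])))  # prints: 100 100
```

With a fraction of 0.5 each base model is fitted on half as many rows as in bagging, which is where the lower computational cost comes from.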

Bagging Predictors, by Leo Breiman. Technical Report No. 421, September 1994. Partially supported by NSF grant DMS-9212419. Department of Statistics, University of California, Berkeley, California 94720.

Bagging is used when the aim is to reduce variance.

Ensemble methods are able to convert a weak classifier into a very powerful one just by averaging multiple individual weak predictors. Important customer groups can also be determined based on customer behavior and temporal data.

The results of repeated tenfold cross-validation experiments for predicting the QLS and GAF functional outcome of schizophrenia with clinical symptom scales using machine learning predictors such as the bagging ensemble model with feature selection, the bagging ensemble model, MFNNs, SVM, linear regression, and random forests. Bagging also reduces variance and helps to avoid overfitting.

Model ensembles are a very effective way of reducing prediction errors.
